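Kafka Overview

Several vivid explanations of Kafka already circulate online, such as the egg-basket-consumer model. Here is another analogy of my own: think of Kafka as a food logistics company. Each Producer is a food factory, for example a pork producer or a chicken producer. ZooKeeper is the company's headquarters, which coordinates storage, transport, and delivery. Each broker is one of the company's storage sites in a different location, and each site can store several kinds of food. One kind of food is a Topic, and one warehouse at a site that holds that food is one Partition of the Topic. Each Consumer is a client company that consumes particular kinds of food, which are delivered to it from the storage sites under the coordination of the headquarters, ZooKeeper.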
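
To make the analogy a little more concrete, here is a minimal in-memory sketch of one topic split into partitions. It is only a toy: the class `ToyTopic` and its methods are hypothetical names invented for illustration, and the key-to-partition rule uses `String.hashCode` as a stand-in (Kafka's own default partitioner hashes the serialized key differently). What it shows is that records with the same key land in the same partition, and that an offset is just a record's position inside that partition.

```java
import java.util.ArrayList;
import java.util.List;

// Toy in-memory model of one topic; NOT how Kafka is implemented.
class ToyTopic {
    // Each partition is an append-only list; the list index plays the role of the offset.
    private final List<List<String>> partitions = new ArrayList<List<String>>();

    ToyTopic(int numPartitions) {
        for (int i = 0; i < numPartitions; i++) {
            partitions.add(new ArrayList<String>());
        }
    }

    // Simplified key-to-partition mapping (assumption: String.hashCode as the hash).
    int partitionFor(String key) {
        return (key.hashCode() & 0x7fffffff) % partitions.size();
    }

    // Appends a value and returns {partition, offset} of the new record.
    int[] append(String key, String value) {
        int p = partitionFor(key);
        List<String> log = partitions.get(p);
        log.add(value);
        return new int[] {p, log.size() - 1};
    }

    // Returns a copy of all records in one partition, starting at fromOffset.
    List<String> read(int partition, int fromOffset) {
        List<String> log = partitions.get(partition);
        return new ArrayList<String>(log.subList(fromOffset, log.size()));
    }

    public static void main(String[] args) {
        ToyTopic pork = new ToyTopic(3);
        int[] first = pork.append("order-1", "ribs");
        int[] second = pork.append("order-1", "bacon");
        System.out.println("partition " + first[0] + ", offsets " + first[1] + " and " + second[1]);
        System.out.println(pork.read(first[0], 0));
    }
}
```

Because both records use the key `order-1`, they are appended to the same partition and receive consecutive offsets.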

Downloading Kafka

- Official Kafka download

- JDK 1.7+

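Installing and Configuring Kafka

- Extract the downloaded archive and set the following environment variable:

KAFKA_HOME: the Kafka root directory

- On Linux, use the .sh scripts in bin; on Windows, use the .bat scripts in bin/windows.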

- Copy config/server.properties to config/server1.properties and config/server2.properties, then edit the three files as follows:

- config/server.properties

    log.dirs=D:/tmp/kafka-logs

- config/server1.properties

    broker.id=1
    listeners=PLAINTEXT://:9093
    log.dirs=D:/tmp/kafka-logs-1

- config/server2.properties

    broker.id=2
    listeners=PLAINTEXT://localhost:9094
    log.dirs=D:/tmp/kafka-logs-2
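
When several brokers run on one machine, each needs its own `broker.id`, listener port, and `log.dirs`. The following is a small sketch of a sanity check for that, using only `java.util.Properties`. The class `BrokerConfigCheck` and its methods are hypothetical helpers, not part of Kafka, and the values shown for config/server.properties (`broker.id=0`, port 9092) are an assumption that the file keeps its shipped defaults. The check also assumes all three keys are present in every file.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.HashSet;
import java.util.Properties;
import java.util.Set;

// Hypothetical helper: checks that broker configs on one host do not collide.
class BrokerConfigCheck {
    // Parses properties text; wraps the checked exception for convenience.
    static Properties parse(String text) {
        Properties p = new Properties();
        try {
            p.load(new StringReader(text));
        } catch (IOException e) {
            throw new IllegalStateException(e);
        }
        return p;
    }

    // Extracts the port from a listener such as PLAINTEXT://localhost:9094.
    static int listenerPort(Properties p) {
        String listener = p.getProperty("listeners");
        return Integer.parseInt(listener.substring(listener.lastIndexOf(':') + 1).trim());
    }

    // True when broker.id, listener port, and log.dirs are all pairwise distinct.
    static boolean noCollisions(Properties... configs) {
        Set<String> ids = new HashSet<String>();
        Set<Integer> ports = new HashSet<Integer>();
        Set<String> dirs = new HashSet<String>();
        for (Properties p : configs) {
            // Set.add returns false when the value was already present.
            if (!ids.add(p.getProperty("broker.id"))) return false;
            if (!ports.add(listenerPort(p))) return false;
            if (!dirs.add(p.getProperty("log.dirs"))) return false;
        }
        return true;
    }

    public static void main(String[] args) {
        // Assumed defaults for server.properties; the other two mirror the settings above.
        Properties s0 = parse("broker.id=0\nlisteners=PLAINTEXT://:9092\nlog.dirs=D:/tmp/kafka-logs\n");
        Properties s1 = parse("broker.id=1\nlisteners=PLAINTEXT://:9093\nlog.dirs=D:/tmp/kafka-logs-1\n");
        Properties s2 = parse("broker.id=2\nlisteners=PLAINTEXT://localhost:9094\nlog.dirs=D:/tmp/kafka-logs-2\n");
        System.out.println("no collisions: " + noCollisions(s0, s1, s2));
    }
}
```

Comparing ports rather than whole listener strings matters here, because `PLAINTEXT://:9093` and `PLAINTEXT://localhost:9093` are different strings that would still compete for the same port.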

Starting and Stopping Kafka

- Before starting, change to the %KAFKA_HOME% directory.

- The commands are as follows. Each start command runs in the foreground, so open a separate command window for each one:

    REM Start ZooKeeper
    bin\windows\zookeeper-server-start.bat config\zookeeper.properties

    REM Start Kafka server node 1
    bin\windows\kafka-server-start.bat config\server.properties

    REM Start Kafka server node 2
    bin\windows\kafka-server-start.bat config\server1.properties

    REM Start Kafka server node 3
    bin\windows\kafka-server-start.bat config\server2.properties

    REM Stop the Kafka servers
    bin\windows\kafka-server-stop.bat

    REM Stop ZooKeeper
    bin\windows\zookeeper-server-stop.bat

- 测试启动情况

bin\windows\kafka-topics.bat --create --zookeeper localhost:2181 --replication-factor 2 --partitions 3 --topic JayJayJay //创建Topic JayJayJay,分区有3个

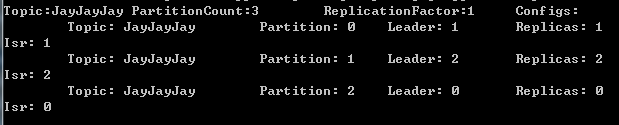

bin\windows\kafka-topics.bat --describe --zookeeper localhost:2181 --topic JayJayJay //查看Topic JayJayJay的情况,结果应有三行,如下图

bin\windows\kafka-console-producer.bat --broker-list localhost:9092 --topic JayJayJay //创建JayJayJay的生产者

bin\windows\kafka-console-consumer.bat --bootstrap-server localhost:9092 --from-beginning --topic JayJayJay //创建JayJayJay的消费者,之后在生产者窗口输入Message,可以再该消费者窗口中接收到

Calling Kafka from Java

Maven dependency:

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>

        <groupId>com.upa</groupId>
        <artifactId>kafka_java</artifactId>
        <version>1.0-SNAPSHOT</version>

        <dependencies>
            <dependency>
                <groupId>org.apache.kafka</groupId>
                <artifactId>kafka-clients</artifactId>
                <version>1.0.0</version>
            </dependency>
        </dependencies>
    </project>

Producer

    import org.apache.kafka.clients.producer.*;

    import java.util.Properties;

    public class MessageProducer {

        // Producer settings shared by all examples.
        private static Properties getProperties() {
            Properties properties = new Properties();
            properties.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, "localhost:9092");
            // "all": wait until all in-sync replicas have acknowledged the record.
            properties.put(ProducerConfig.ACKS_CONFIG, "all");
            properties.put(ProducerConfig.RETRIES_CONFIG, 0);
            properties.put(ProducerConfig.BATCH_SIZE_CONFIG, 16384);
            properties.put(ProducerConfig.LINGER_MS_CONFIG, 1);
            properties.put(ProducerConfig.BUFFER_MEMORY_CONFIG, 33554432);
            properties.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.StringSerializer");
            properties.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.StringSerializer");
            return properties;
        }

        /**
         * @Author: Jay
         * @Description: Send messages to a specific topic
         * @Date: 15:32 2018/1/11
         */
        private static void example1() throws Exception {
            Producer<String, String> producer = new KafkaProducer<String, String>(getProperties());
            for (int i = 0; i <= 100; i++) {
                producer.send(new ProducerRecord<String, String>("JayJayJay", Integer.toString(i), Integer.toString(i)));
            }
            // close() waits for previously sent records to complete.
            producer.close();
        }

        /**
         * @Author: Jay
         * @Description: Calling get() after send() blocks until the send completes;
         *               if the send failed, get() throws an exception
         * @Date: 15:32 2018/1/11
         */
        private static void example2() throws Exception {
            Producer<String, String> producer = new KafkaProducer<String, String>(getProperties());
            ProducerRecord<String, String> record = new ProducerRecord<String, String>("JayJay", "Example2_1", "Value2");
            System.out.println(producer.send(record).get().offset());
            ProducerRecord<String, String> record2 = new ProducerRecord<String, String>("Jay", "Example2_2", "Value3");
            System.out.println(producer.send(record2).get().partition());
            producer.close();
        }

        /**
         * @Author: Jay
         * @Description: Non-blocking send that handles the result in a callback
         * @Date: 15:34 2018/1/11
         */
        private static void example3() throws Exception {
            Producer<String, String> producer = new KafkaProducer<String, String>(getProperties());
            ProducerRecord<String, String> record = new ProducerRecord<String, String>("Jay", "Example3_1", "Value3_1");
            producer.send(record, new Callback() {
                public void onCompletion(RecordMetadata recordMetadata, Exception e) {
                    if (e != null) {
                        // The send failed.
                        e.printStackTrace();
                    } else {
                        System.out.println(recordMetadata.topic());
                    }
                }
            });
            producer.close();
        }

        public static void main(String[] args) throws Exception {
            example1();
        }
    }

Consumer

    import org.apache.kafka.clients.consumer.ConsumerRecord;
    import org.apache.kafka.clients.consumer.ConsumerRecords;
    import org.apache.kafka.clients.consumer.KafkaConsumer;
    import org.apache.kafka.clients.consumer.OffsetAndMetadata;
    import org.apache.kafka.common.TopicPartition;

    import java.util.*;

    public class MessageConsumer {

        /**
         * @Author: Jay
         * @Description: By default, each message is delivered to only one of the
         *               consumers that share the same group.id
         * @Date: 16:01 2018/1/11
         */
        private static Properties getProperties() {
            Properties properties = new Properties();
            properties.put("bootstrap.servers", "localhost:9092");
            properties.put("group.id", "group1");
            // Note: "group.name" is not a Kafka consumer config key; the client
            // ignores it and logs a warning. Group membership is set by group.id.
            properties.put("group.name", "g3");
            properties.put("enable.auto.commit", "true");
            properties.put("auto.commit.interval.ms", "1000");
            properties.put("key.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
            properties.put("value.deserializer", "org.apache.kafka.common.serialization.StringDeserializer");
            return properties;
        }

        /**
         * @Author: Jay
         * @Description: Receive messages from Kafka
         * @Date: 15:42 2018/1/11
         */
        private static void example1() throws Exception {
            KafkaConsumer<String, String> consumer = new KafkaConsumer<String, String>(getProperties());
            consumer.subscribe(Arrays.asList("Jay"));
            while (true) {
                // Wait up to 100 ms for new records.
                ConsumerRecords<String, String> records = consumer.poll(100);
                System.out.println(records.count());
                for (ConsumerRecord<String, String> record : records) {
                    System.out.printf("offset = %d, key = %s, value = %s%n", record.offset(), record.key(), record.value());
                }
            }
        }

        // Buffer records and commit offsets manually once a batch has been collected.
        // Manual commits are normally paired with enable.auto.commit=false; with
        // auto-commit on, offsets may also be committed in the background.
        private static void example2() throws Exception {
            final int minBatchSize = 50;
            KafkaConsumer<String, String> consumer = new KafkaConsumer<String, String>(getProperties());
            consumer.subscribe(Arrays.asList("Jay"));
            List<ConsumerRecord<String, String>> buffer = new ArrayList<ConsumerRecord<String, String>>();
            while (true) {
                ConsumerRecords<String, String> records = consumer.poll(100);
                for (ConsumerRecord<String, String> record : records) {
                    buffer.add(record);
                }
                if (buffer.size() >= minBatchSize) {
                    System.out.println(buffer.size());
                    consumer.commitSync();
                    buffer.clear();
                }
            }
        }

        // Process records partition by partition and commit each partition's offset.
        private static void example3() throws Exception {
            KafkaConsumer<String, String> consumer = new KafkaConsumer<String, String>(getProperties());
            consumer.subscribe(Arrays.asList("JayJayJay"));
            try {
                while (true) {
                    ConsumerRecords<String, String> records = consumer.poll(Long.MAX_VALUE);
                    for (TopicPartition partition : records.partitions()) {
                        List<ConsumerRecord<String, String>> partitionRecords = records.records(partition);
                        for (ConsumerRecord<String, String> record : partitionRecords) {
                            System.out.println(record.offset() + ": " + record.value());
                        }
                        long lastOffset = partitionRecords.get(partitionRecords.size() - 1).offset();
                        // The committed offset is the offset of the next record to read.
                        consumer.commitSync(Collections.singletonMap(partition, new OffsetAndMetadata(lastOffset + 1)));
                    }
                }
            } catch (Exception e) {
                e.printStackTrace();
            } finally {
                consumer.close();
            }
        }

        public static void main(String[] args) throws Exception {
            example3();
        }
    }
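
The `lastOffset + 1` in example3 can look odd at first. The toy below, which uses no Kafka classes at all, sketches why: the committed value marks the next record to read, so a consumer that restarts from the committed position neither repeats nor skips records. `OffsetCommitSketch` and `pollAndCommit` are hypothetical names, and a plain list stands in for one partition's log.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Toy model of offset commits for a single partition; not the Kafka API.
class OffsetCommitSketch {
    // Committed position: the offset of the NEXT record to read.
    private long committed = 0;

    // Reads up to maxRecords starting at the committed position, then commits.
    List<String> pollAndCommit(List<String> log, int maxRecords) {
        int from = (int) committed;
        int to = Math.min(log.size(), from + maxRecords);
        List<String> batch = new ArrayList<String>(log.subList(from, to));
        if (!batch.isEmpty()) {
            long lastOffset = to - 1;     // offset of the last record processed
            committed = lastOffset + 1;   // same arithmetic as in example3
        }
        return batch;
    }

    long committed() {
        return committed;
    }

    public static void main(String[] args) {
        List<String> log = Arrays.asList("m0", "m1", "m2", "m3", "m4");
        OffsetCommitSketch consumer = new OffsetCommitSketch();
        System.out.println(consumer.pollAndCommit(log, 2) + " committed=" + consumer.committed());
        System.out.println(consumer.pollAndCommit(log, 10) + " committed=" + consumer.committed());
    }
}
```

If the commit stored `lastOffset` itself, the next read would start at a record that had already been processed.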

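Notes

- Consumers are organized into groups. A group can contain several consumers, and all of them share the same group.id. (The group.name property set in the consumer code is not a Kafka consumer configuration key; the client ignores it and logs a warning.) For a topic that the group subscribes to, each message is received and processed by only one consumer in the group.

- To run several consumers in one group, start the consumer program several times with the same group.id. To run several groups, each with its own consumers, change group.id before starting. In short, each running process is one consumer.

- After starting the consumers, run the producer to generate messages. Taken together, the consumers of a group process all of the messages, while each consumer within the group processes a different subset. Messages are divided by partition, so a group can usefully run at most as many consumers as the topic has partitions.

- If several groups subscribe to the same topic, every group receives all of the messages in that topic.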
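
The group behaviour described in these notes can be sketched without a broker. In the toy below, partitions are handed out round-robin to the consumers of one group, and each group is assigned independently, so every group covers all partitions. `ConsumerGroupSketch` and `assign` are hypothetical names, the consumer names are assumed to be unique, and round-robin is a simplification chosen for illustration; Kafka's own assignment strategies are configurable and may distribute partitions differently.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Toy model of partition assignment inside consumer groups; not the Kafka API.
class ConsumerGroupSketch {
    // Assigns partitions 0..numPartitions-1 round-robin to the consumers of ONE group.
    static Map<String, List<Integer>> assign(List<String> consumers, int numPartitions) {
        Map<String, List<Integer>> result = new LinkedHashMap<String, List<Integer>>();
        for (String consumer : consumers) {
            result.put(consumer, new ArrayList<Integer>());
        }
        for (int p = 0; p < numPartitions; p++) {
            String owner = consumers.get(p % consumers.size());
            result.get(owner).add(p);
        }
        return result;
    }

    public static void main(String[] args) {
        // Two groups subscribe to the same 3-partition topic.
        System.out.println("group1: " + assign(Arrays.asList("c1", "c2"), 3));
        System.out.println("group2: " + assign(Arrays.asList("c3"), 3));
    }
}
```

Within group1 no partition is shared between c1 and c2, while group2's single consumer owns every partition, which matches the rule that each group sees all messages but each message goes to only one consumer per group.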